Giving Feedback on AI Writing Feedback

This was written with Lex, one of many tools we are trying as part of our look at AI writing platforms. If you are working on ways for AI to improve the writing experience — in or out of classrooms — we’d love to hear from you!

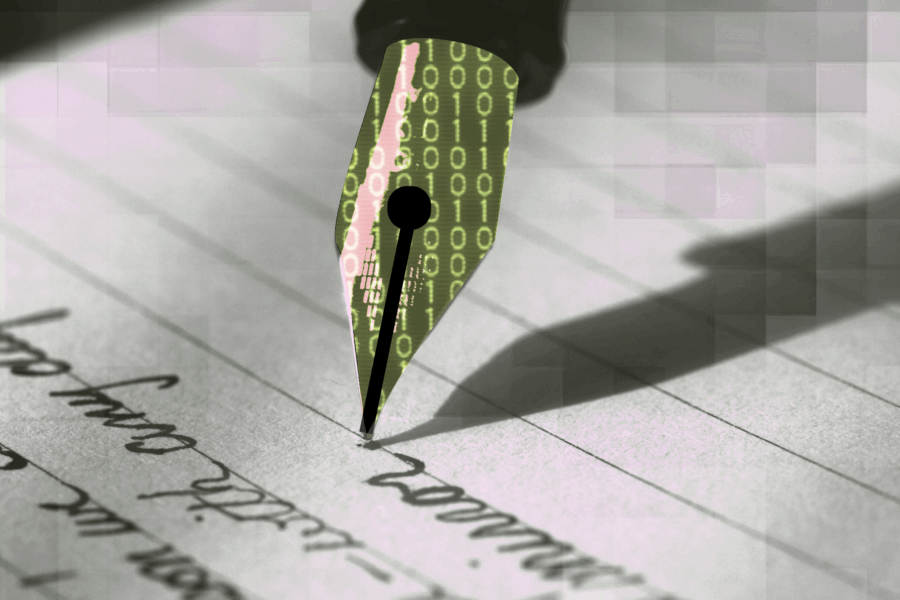

In a world where we use artificial intelligence to produce work that AI will grade and evaluate, what will the future of writing look like, and how will it shape how the next generations teach and learn to write?

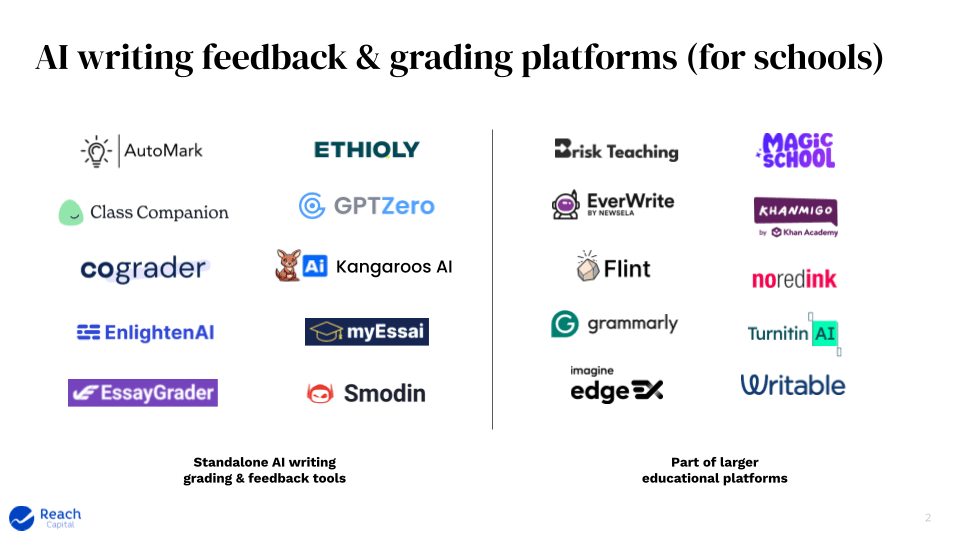

With generative AI, new grading and feedback tools have emerged for classroom teachers that aim to save them time while helping students become better writers. Grading and giving feedback are among the most time-consuming tasks for teachers, and thus pain points that contribute to burnout.

Recent studies suggest AI is becoming as good as an “overburdened” teacher at scoring essays and delivering feedback. Yet for now, the results are inconsistent enough to give some pause. Especially for young writers, personalized feedback must consider their background, knowledge, and experiences, which teachers are better placed to understand than AI.

From the pen to the printing press to typewriters and computers, technology has continually transformed how we write and learn to write. As AI is woven into writing and feedback, how can these tools provide meaningful assistance and actionable insights, while preserving room for students and teachers to foster relationships that support their growth as writers?

Observations on AI Writing Feedback

One study from The Learning Agency found that ChatGPT grades similarly to a human in assigning a final score, but struggles to identify specific building blocks of an argumentative essay.

We tested five new AI essay graders designed for school use with two writing samples: a 12th-grade argumentative essay on the rise of Caudillismo in 19th-century Latin America from Gabe’s history class, and an 8th-grade narrative sample about life during the Dust Bowl from the Vermont Writing Collaborative, a nonprofit founded by public school teachers and focused on writing instruction.

Here’s what we observed:

The scores given by the AI graders vary widely. Given the same assignment, essays and 15-point scoring rubric, the final scores ranged from 9 to a perfect 15. Even where the comments and feedback were similar, the scores differed across the five tools.

Of course, how humans interpret and grade based on a rubric is also subjective. Thankfully, these tools do allow teachers to review and adjust the grades. Several tools say their AI grader can be trained on these changes to improve how it grades future assignments. Class Companion, one of the tools we tested, also allows students to dispute the score and feedback given by AI, which opens up an opportunity for constructive and critical review of the issue with the teacher.

How actionable is the feedback? Does it know enough about the subject matter to suggest specific ideas and topics for further consideration?

In general, we found AI writing graders can competently evaluate and deconstruct the mechanical elements of writing (grammar, tone, diction, syntax) and the structural components as set by the rubric (supporting evidence, logical construction). But when the feedback touched on specific subject matter, it often lacked the depth and specificity that a teacher might offer. This was true of both assignments, where some of the critical feedback felt surface level: “explore more deeply,” without specifying where or how to look.

We believe there are opportunities to connect these tools to the readings, syllabus, lesson plans and other resources used in the classroom, which could help fill the context gaps. As AI grading increasingly becomes a feature of AI teacher “copilots,” the larger vision is that feedback can incorporate the broader classroom environment beyond just the assignment itself.

Larger edtech platforms are moving in this direction. Newsela (a Reach portfolio company) recently launched an AI teaching assistant for student writing. Earlier this month, Khan Academy and Imagine Learning announced similar tools within the same week. Several AI graders we tested also white-label their tools to other edtech platforms, and we expect to see more of these partnerships.

How appropriate is the feedback? In order for it to be actionable, it also has to be communicated in the right way. In other words, does this feedback make sense to an eighth grader? Is the tone encouraging or clinical?

Most of the tools we tested adapted the language of the feedback to the grade level. For the 12th grade assignment, the comments were noticeably more academic and substantive than those for the 8th grade writing sample. While the latter assignment received shorter, pared-down feedback, it was still enough to provide directions for revisions.

In organizing the feedback, many of the tools adopted tried-and-true best practices, such as the compliment sandwich or “glow and grow.” There were some rare instances that seemed unnecessarily harsh; one comment stated “Your analysis is quite shallow” without substantiating why, which felt discouraging and borderline disparaging.

The quantity of feedback also varied. Some tools condensed everything into one summative paragraph, while others offered line-by-line comments akin to those in a Google Doc. A few over-dialed on the feedback, in our opinion; when every sentence was flagged for potential improvements, it felt jarring and counterproductive.

Several of the tools touted that their AI systems can learn from the tone as teachers modify the feedback. We did not test this feature, but it is a promising avenue for aligning the style of the AI feedback more closely with the teacher’s. At the end of the day, teachers need to be in the loop.

Further Opportunities

For AI to meaningfully transform how we teach and learn writing, its development must be grounded in a deep understanding of pedagogy and human development. After all, writing is not just a test and reflection of knowledge and thinking, but also an extension of our identity and voice. It is a journey of growth, rather than a shortcut.

Based on research on writing as well as our experiences as writers, here are a couple of principles worth keeping in mind.

Successful writing experiences often involve working through frustration. To the extent that AI can help students untangle mental knots on their own instead of solving the problem for them, it creates a more impactful and delightful experience. This “productive struggle” is a formative part of all learning experiences, yet finding this delicate balance has long been the domain of teachers and coaches who have a deeper understanding of the learner and know how to provide appropriate scaffolds. Where can AI draw this information from? Can AI capture the struggle in real time as we write, delete and rewrite?

Feedback is social. Our exercise for this post focused on the quality of AI feedback. But feedback can feel especially meaningful when it comes from our peers. Research on peer feedback has found that it leads to more revisions and stronger performance on assessments. Furthermore, when students critique each other’s writing, they develop an “editor’s voice” that they can apply to their own work. Can AI help facilitate this and make feedback a more peer-led, social experience in the classroom?

Tony Wan was formerly the managing editor at EdSurge. Gabe Hawkins is a rising freshman at Northwestern University, pursuing a major in journalism. He has previously written for The Nueva Current, the official student newspaper at The Nueva School.