Why LLMs Are Bad at Math — and How They Can Be Better

“What is computation?”

When Stephen Wolfram, founder and CEO of Wolfram Research, the company behind Wolfram Alpha, was asked this question by Lex Fridman on his podcast, his response was simple: “Computation is following rules. That’s kinda it.”

Symbolic computation systems such as Wolfram Alpha, Maple, and Mathematica do exactly this: they follow preset rules defined by the software. Because these rules-based systems are deterministic, they are predictable and quite accurate at solving arithmetic, geometry, algebra and calculus problems, which is why they are loved by students and professionals around the world.

With AI and large language models (LLMs), many new tools have emerged as the next generation of education technologies. In 2023 nearly 1 in 5 students who had heard of ChatGPT used it for help with their schoolwork, a number that has likely grown since. For a student looking for help writing their history essay and formatting their citations properly, an LLM-based study tool might prove helpful and save them time.

But for a student crunching on algebra homework, an LLM-based study tool might fail them. It’s been widely reported that these systems struggle with math.

Why is this new technology so bad at the simple computation that decades-old tools have long handled well? And what place does it have in the new generation of math tools that will shape how future generations learn and understand the subject?

How do LLMs work today and why do they struggle with math?

To understand why LLMs struggle, we first need to understand how they process and predict natural language. LLMs have been called “stochastic parrots,” a term coined by researcher Emily Bender and her co-authors to convey that while LLMs can generate language that sounds convincing to a human, they don’t understand the meaning of the language they are “parroting.” And unlike symbolic computation systems, LLMs are typically non-deterministic: because they sample each next word from a probability distribution, the same input won’t necessarily yield the same output every time.
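To make the contrast concrete, here is a minimal sketch of the difference between always taking the most likely answer and sampling one. The prompt and the probabilities in `NEXT_TOKEN_PROBS` are invented for illustration and are not measured from any real model.

```python
import random

# Hypothetical next-token probabilities for the prompt "7 x 8 =".
# These numbers are made up for illustration only.
NEXT_TOKEN_PROBS = {"56": 0.80, "54": 0.12, "58": 0.08}

def greedy_pick(probs):
    """Always return the most likely token, so the result never changes."""
    return max(probs, key=probs.get)

def sampled_pick(probs, rng):
    """Draw a token in proportion to its probability, so results can vary."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # seeded only so the demo is repeatable
greedy_answers = {greedy_pick(NEXT_TOKEN_PROBS) for _ in range(200)}
sampled_answers = {sampled_pick(NEXT_TOKEN_PROBS, rng) for _ in range(200)}

print("greedy:", greedy_answers)
print("sampled:", sampled_answers)
```

The greedy picker gives one answer every time, the way a rules-based system would. The sampled picker usually lands on the likeliest answer but occasionally returns a wrong one, which is roughly the behavior students run into when they ask the same question twice.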

Even with these limitations, LLMs have proven to be proficient at writing, given their ability to effectively predict the next word in a sequence. For example, if you had to fill in the blank for the sentence “The batter hit the ball out of the ____ ”, there is a high likelihood you would guess “park.” You are trained on the data of your lived linguistic experience, and LLMs are trained on a vast share of the writing on the open internet.
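A toy version of this fill-in-the-blank behavior can be sketched with simple word counts. Real LLMs use neural networks rather than lookup tables, so treat this only as an analogy; the four-sentence `CORPUS` and the function names are assumptions made up for the example.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus standing in for the model's training text.
CORPUS = [
    "the batter hit the ball out of the park",
    "she hit the ball out of the park again",
    "he walked the dog in the park",
    "the ball rolled out of the yard",
]

def train_context_counts(sentences, context_size=3):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    return counts

def predict_next(counts, prompt, context_size=3):
    """Return the most frequent continuation of the prompt's last words, or None."""
    context = tuple(prompt.lower().split()[-context_size:])
    if context not in counts:
        return None  # nothing similar was seen during "training"
    return counts[context].most_common(1)[0][0]

counts = train_context_counts(CORPUS)
print(predict_next(counts, "The batter hit the ball out of the"))
print(predict_next(counts, "seventeen times twenty three equals"))
```

The first prompt is completed from patterns the predictor has seen before. The second has no match in the corpus, so this toy predictor has nothing to go on: it counts words, it does not calculate.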

Viewing math like a language at times can yield accurate results. But LLMs don’t actually fundamentally understand the mathematical concepts that underpin the calculations. If I were to ask an LLM “what does 1+1 equal,” there is a good chance that it would guess the right answer — not because it truly understands addition, but simply because it has likely seen the equation before and can guess the right answer. Less conventional — but still basic — computation questions may yield varied results.

Another reason LLMs struggle with math is that they are usually trained on the open internet, where math content is rarely well labeled or structured. Mathematical expressions require specific notation and symbols, but this data is frequently not structured in a way that is easily digestible for LLM training. As a result, models often fail to capture the meaning behind mathematical symbols.

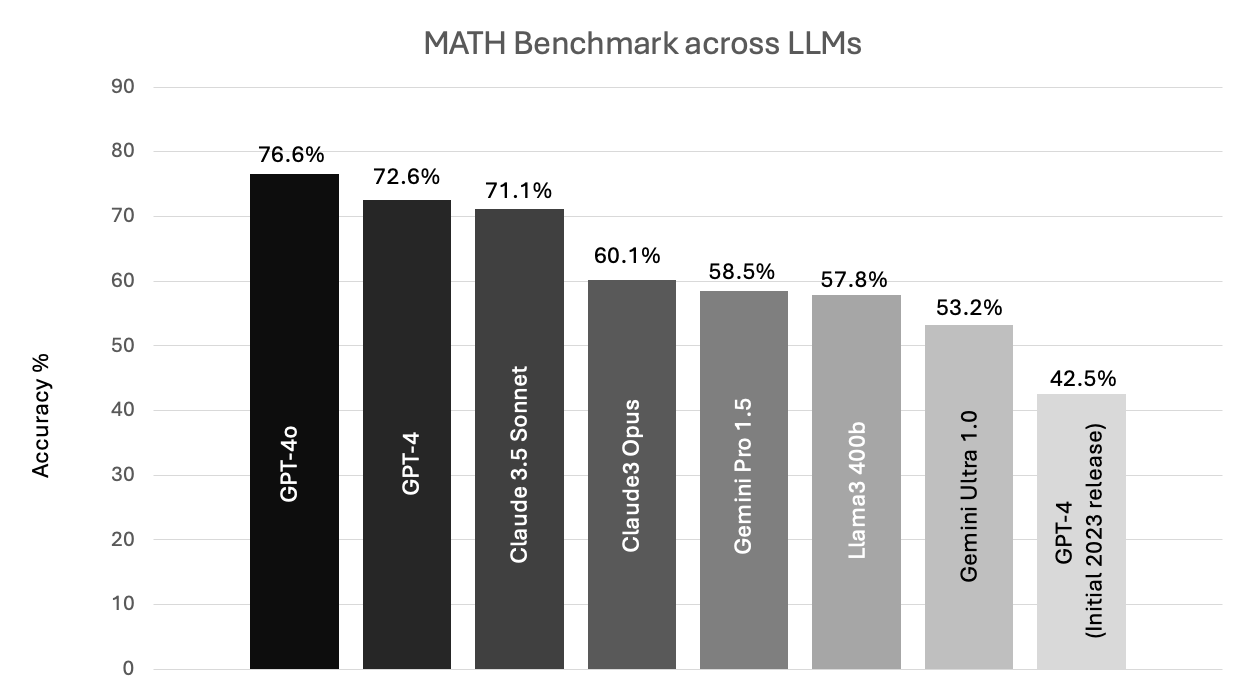

When testing LLMs on their math ability, two benchmarks are commonly used. The first, MATH, is a benchmark of high-school level math problems. The second, GSM8K, is a benchmark composed of grade-school level math word problems. While these benchmarks certainly don’t account for the entire math universe, they are helpful when measuring LLM performance to date. According to some of the latest benchmark results released by OpenAI, GPT-4o is the leader on the MATH benchmark at 76.6% accuracy, closely followed by Claude 3.5 Sonnet.

A caveat: While there are universal LLM benchmarks such as MATH, there is no third-party standards body that does the actual testing. For now we rely on LLM creators themselves to test the model against the benchmark, as in the case of OpenAI releasing many of the figures below. Still, they are helpful in providing directional differences across LLMs, even if the actual “Accuracy %” benchmark measure for the same LLM can look different across different studies in the academic community.

What are strategies for making AI better at math?

The industry is attempting to improve LLM math performance in a few novel ways.

The first solution is reinforcing the process, not the outcome. Instead of focusing on the right answer, this model training approach focuses on the steps needed to get there. This distinction is known as “process supervision” versus “outcome supervision” as defined by OpenAI. The former involves providing feedback on each specific step in a given chain of thought. On the MATH benchmark, models that were process-supervised outperformed the outcome-supervised models.
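The difference between the two kinds of feedback can be sketched in a few lines. This is a simplified illustration, not OpenAI's training setup: here each step is checked automatically with basic arithmetic, as a stand-in for the step-level feedback described above, and the step format and function names are assumptions invented for the example.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def outcome_reward(steps, expected_answer):
    """Outcome supervision: one signal, based only on the final claimed value."""
    final_claim = steps[-1][3]
    return 1.0 if final_claim == expected_answer else 0.0

def process_rewards(steps):
    """Process supervision: one signal per step, checking each step on its own."""
    return [1.0 if OPS[op](a, b) == claimed else 0.0
            for a, op, b, claimed in steps]

# Problem: 2 * (3 + 4). Each step is (left, operator, right, claimed_result).
sound_solution = [(3, "+", 4, 7), (2, "*", 7, 14)]
lucky_solution = [(3, "+", 4, 6), (2, "*", 6, 14)]  # two errors that cancel out

for name, solution in [("sound", sound_solution), ("lucky", lucky_solution)]:
    print(name, outcome_reward(solution, 14), process_rewards(solution))
```

Both solutions end on 14, so outcome-only feedback rewards them equally. Step-level feedback flags both steps of the second solution as wrong, which is the kind of signal that discourages a model from reaching right answers through faulty reasoning.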

The second solution for improving math performance is integrating LLMs with symbolic computing systems so that users can get the best of both worlds. They can access the language understanding capabilities of LLMs, which can break down word problems and provide step-by-step explanations, as well as the rules-based structure of symbolic computing systems, which yields higher accuracy.

This can work like the ChatGPT + Wolfram plugin, which hands calculations to an external tool, or by inserting these deterministic “rules” directly into the model’s reasoning process. A joint study by Tsinghua University and Microsoft found that training Tool-integrated Reasoning Agents (ToRA) yielded higher accuracy (51%) on the MATH benchmark than GPT-4 (43%). Despite the advantages of tool integration, the researchers noted that LLMs still struggle with some areas of math, such as geometry (given their lack of spatial understanding) and advanced algebra.
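As a rough sketch of the hand-off idea, imagine the model writes the explanation but leaves a marker wherever a number needs computing, and a deterministic evaluator fills it in. The `[calc: ...]` marker format, the `draft` text, and the function names are all hypothetical; this is not how the Wolfram plugin or ToRA is actually implemented.

```python
import ast
import operator
import re
from fractions import Fraction

BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def evaluate(expression):
    """Deterministically evaluate +, -, *, / on integers using exact fractions."""
    def walk(node):
        if (isinstance(node, ast.Constant) and isinstance(node.value, int)
                and not isinstance(node.value, bool)):
            return Fraction(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in BINARY_OPS:
            return BINARY_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression.strip(), mode="eval").body)

# Hypothetical marker the model is assumed to emit when it needs a calculation.
TOOL_CALL = re.compile(r"\[calc: ([^\]]+)\]")

def fill_tool_calls(draft):
    """Replace every [calc: ...] marker in the draft with the computed value."""
    return TOOL_CALL.sub(lambda m: str(evaluate(m.group(1))), draft)

# A made-up model draft: the words come from the LLM, the numbers do not.
draft = "Each box holds 12 pens, so 37 boxes hold [calc: 37 * 12] pens."
print(fill_tool_calls(draft))
```

The division of labor is the point: the language model decides what needs to be computed and explains why, while the rules-based evaluator returns the same exact result every time it is asked.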

Another approach to improve LLM math performance is being able to capture differentiated data that can be used for training. This might look like feeding a textbook-style explanation of a math problem into an LLM so it can learn the steps needed to arrive at the solutions.

Getting customer or user-generated data is also advantageous. AI-led math study tools such as Photomath capture such data from students who take photos of their math word problems and upload them for AI to parse and propose a solution. This increased volume of problems that the AI is exposed to, and reinforcement from a user on whether the explanation of steps was helpful, can further improve the model’s accuracy and ability to explain the steps. (This approach is known as Reinforcement Learning from Human Feedback, or RLHF.)

One company taking a multi-pronged approach is Thinkverse (a Reach portfolio company), which combines an LLM layer that supports step-by-step problem explanation, a symbolic computation layer that ensures that solutions are accurate, and an Optical Character Recognition (OCR) layer that extracts math equation text from images and scanned documents and allows for multi-modal content ingestion.

AI-powered math tools in the market today

Today there are many AI-powered math study tools on the market: Thinkverse, AI Math, Studeo, Photomath, Mathpix (another Reach Capital portfolio company), Gauthmath, Answer.ai, Thetawise, Mathful, and Sizzle are just a handful of examples. These subject-specific tools, which are often built on the latest GPT or Claude models, aim to differentiate themselves with a student-friendly interface and experience. Thetawise, for instance, allows for multi-modal content ingestion (e.g., students can upload raw handwritten notes or a photo, or ask their question aloud). Prices range from free to upwards of $20 per month.

For anyone in this space looking to build a sustainable business from charging students, the quality of experience will need to be significantly better than the bar set by the free version of ChatGPT. When pricing these products, companies should also consider that a significant number of potential early adopters may already be paying for the premium version of ChatGPT ($20/month), which includes access to one of the top LLMs for math (GPT-4o) and the ChatGPT + Wolfram plugin (available for free).

One emerging leader in the LLM-for-math space is Mathpresso, which reaches 10 million monthly active users across South Korea, Japan, Thailand, and Vietnam. Mathpresso created MathGPT, its own math-specific LLM that powers many of its apps. Co-developed by Mathpresso, telecom giant KT and AI startup Upstage, this LLM claims to use customer-generated, proprietary data and synthetically created data to outperform others on the market, including Microsoft’s ToRA and GPT-4, on the MATH and GSM8K benchmarks. Although MathGPT has recently been surpassed by GPT-4o and Claude 3.5 Sonnet, it is still an impressive feat that a startup has been able to compete with incumbents with an LLM made from scratch.

Reach out!

We have some ways to go before LLM-based math tools can be accurate enough to be reliable and trustworthy. Weaving in symbolic computing systems can help. Nonetheless, LLM-based AI study tools hold promise to be a powerful learning aid. Instead of simply crunching numbers, they can help process messy math problems (in both the natural language and the natural world) and aid in the communication needed to scaffold and explain the steps towards the solution. This will accelerate learning across the board.

If you are working on new AI paradigms to progress math learning and understanding, or are exploring what’s possible with LLMs in the future of math education, we’d love to hear from you!

Thank you to Thinkverse co-founders Vincent Zhang and Sean Qiyi for sharing their perspectives. And to Tony Wan and James Kim for the review and edits.

Viraj Singh is a Summer Associate at Reach Capital and an incoming MBA/MA in Education candidate at Stanford. Prior to Reach, he worked at Avataar, a Series B AI startup, was a consultant at Bain & Co, and was a teaching fellow with Breakthrough Collaborative, where he taught physics to 8th graders.