“The College Essay Is Dead.” So declared Stephen Marche in The Atlantic, in a piece arguing that the time-honored tradition will be “disrupted from the ground up” by ChatGPT, an AI chatbot capable of generating college-level written responses.

What the sensational headline masks, though, is the author’s hope that this is also an opportunity to rethink cultural norms in academia, in particular the longstanding divide between the humanities and STEM. In an age where AI can emulate humans, and where natural language processing is the future of language, Marche writes, humanists and technologists “are going to need each other” more than ever. There is more at stake than homework.

Usually there’s a lag between the emergence of a new technology and its recognition and adoption in education. What is remarkable about ChatGPT and GPT-3 is how rapidly people across the education community have taken them up. Students are leading the charge, using them to write papers (so long, paper mills!) and stirring cries of cheating among teachers. Other educators have experimented with them to create syllabi and grading rubrics, and are championing their creative potential.

Technologists, naturally, are ecstatic over the possibilities unleashed by GPT and other new AI models that are capable of generating writing, art, music, games, code and other creative output that rivals, if not exceeds, human imagination and creativity. Out of curiosity, I created my own GPT-3 bot, trained on over 700 of my EdSurge articles, that can generate articles that sound uncannily like me. (Unfortunately, it’s all fake news because GPT-3 makes up facts.)

More serious efforts are underway to leverage new AI models for studying, writing, reading and other learning activities. That Holy Grail of an AI tutor that’s just as good as a human? Suddenly it seems within reach.

In our portfolio, we’ve seen firsthand how groundbreaking developments in AI, coupled with thoughtful design and implementation, can enable and scale impactful educational experiences. Tools like TeachFX (voice AI for teacher feedback), WriteLab (writing feedback; acquired by Chegg), Mainstay (student support) and Gradescope (grading assistant; acquired by Turnitin) are now category leaders that have reached millions of students and teachers.

Today, new businesses are being built entirely around new AI models, while established incumbents are also using them to expand existing offerings. This new wave of “generative” AI will not only give rise to new edtech market leaders, but also challenge longstanding practices and beliefs in teaching and learning.

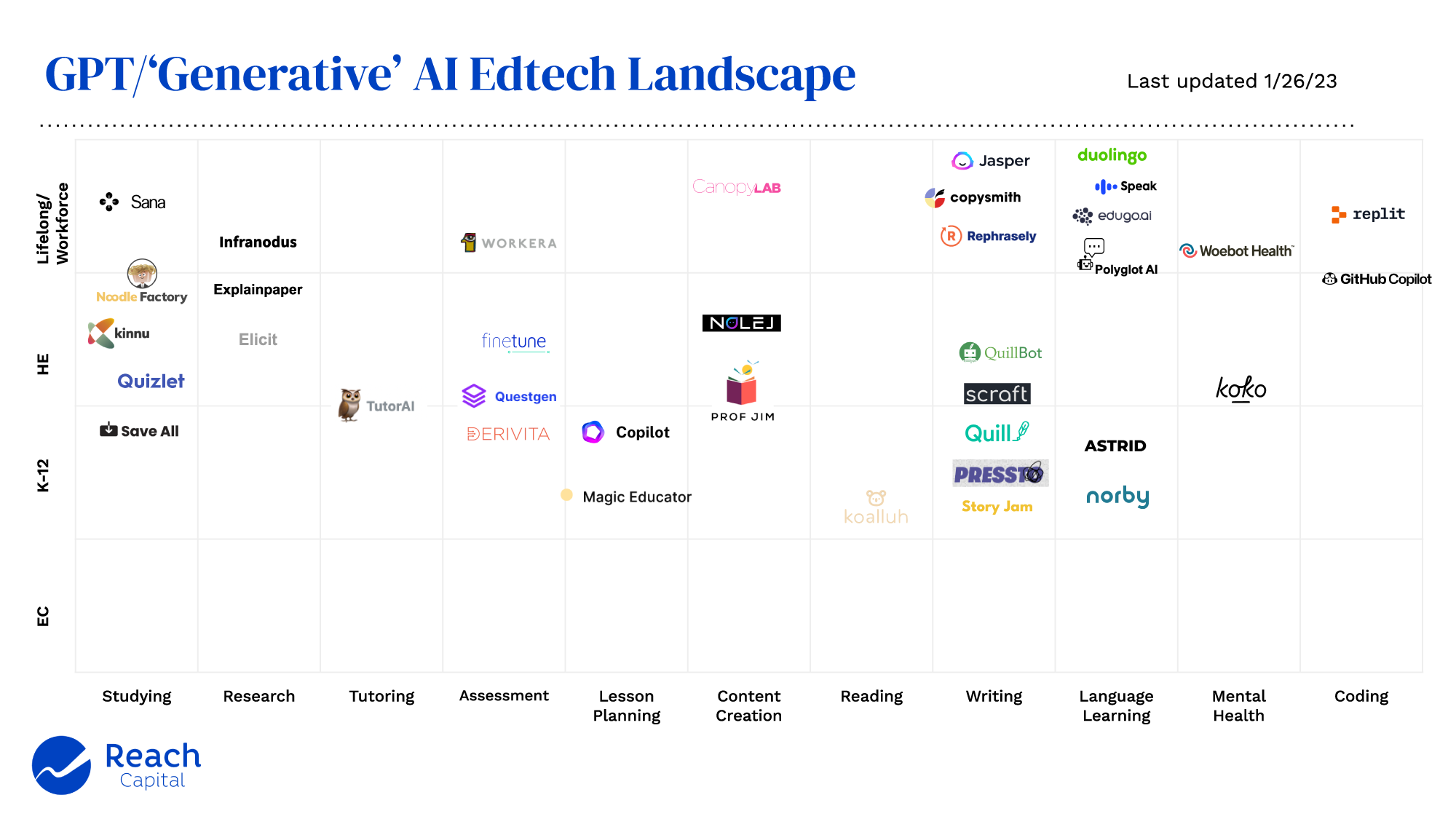

GPT and ‘Generative’ AI in Edtech

Below is our survey of edtech companies that are leveraging GPT and other “generative” AI models. For some companies, AI is core to the product offering and user experience. For others, it operates more in the background, as a supporting or peripheral feature that is only part of the overall product.

This is not an exhaustive list; let us know of others we should add to the map!

Here are a few broad categories where generative AI is emerging in the edtech market:

Content, Content, Content

This is an obvious use case for AI that can generate stories and art with infinite possibilities, and new startups are applying it to educational content. Prof Jim turns traditional textbook content into rich multimedia experiences and courses; Koalluh combines GPT-3, DALL-E and other AI models to help kids create books they and their friends want to read. Magic Educator helps educators create lesson plans and other instructional support materials.

Other, more established companies are using AI to generate content in service of their existing core offerings. Duolingo uses GPT-3 for French grammar corrections and to generate items for its English test. Workera uses it to generate question items for its skills assessments.

With new, AI-powered editing tools like Descript and Runway, it will be easier than ever for people to produce video and interactive digital courses, at reduced time and cost. Not long ago, a MOOC could cost upwards of $300K and take several months to make. And since courses go out of date quickly, these tools will also make it much easier and cheaper to update materials.

Immersive, Personalized Experiences

For language learning apps, one of the hardest things for technology to replicate has been speaking practice that feels like natural conversation. But with GPT-3 and voice AI models, companies like Speak and Polyglot now make this possible.

We can expect to see new AI models applied to other areas where interaction and real-time feedback are valuable for learning: coaching, editing and tutoring. Writing tools like Quill and QuillBot already use AI to offer basic feedback on student writing; it may not be long before the quality and range of that feedback is on par with a teacher or newsroom editor. To many, ChatGPT’s ability to answer (most) questions far surpasses the dozens of AI tutors that came before it. Key to these advances will be fine-tuning models on data sets specific to the subject domain or instructional experience.

AI is also enhancing social interactions and learning. In gaming, AI Dungeon uses AI as a “dungeon master” that can generate an infinite array of storylines. In education, companies like Hidden Door and Story Jam offer kids AI-guided collaborative experiences to explore and write stories together.

Creative Partner and Co-creator

Run out of ideas? Don’t know how best to express them? In marketing copywriting, there are many AI tools like Jasper that help people overcome writer’s block (and, in many cases, will write entire posts). In education, apps like Scraft help students brainstorm ideas and outlines for essays, and surface informational resources to substantiate those ideas.

Not all AI ideas are good. Some are stale; others are offensive or outright inappropriate. But even an inkling of a new idea, something only 1% good, can get the creative juices flowing, and we can hone it until we get what we want.

AI offers not just ideas, but suggestions and instructions as well. That, combined with low-code and no-code software development tools, is already fueling new apps and programs. People are asking ChatGPT to write code for their app ideas, then copying that code into Replit to build and run it. This will make building and deploying software dramatically more accessible. From websites to chat apps to solar power output calculators, this will take project-based learning to a whole new level.

AI Literacy and Ethics

As AI becomes woven into everyday life and work, in ways subtle and obvious, AI literacy will be ever more important. In a world of AI-generated ideas and content, critical thinking and AI literacy will be essential for discerning quality and truth amid a deluge of material. How will we teach kids to use AI? When should they be introduced to these tools? Who will review materials and set up guardrails against inappropriate content? Teachers, too, will need support.

While a growing number of schools teach computer science, AI curricula are virtually nonexistent. The Gwinnett County school district is reportedly the only U.S. district to teach AI as part of its curriculum. Expect outside industry partners to play a role. One provider, Mindjoy, runs workshops in schools around the world to introduce students and teachers to GPT-3 and new AI tools.

What Mindjoy organizers found, perhaps unsurprisingly, was that kids often asked if AI could do their homework. This is one of the biggest flashpoints in education circles: Is this cheating? Can we cite AI as a source or reference? Should we ban its use on assignments and tests? (There are rumors that ChatGPT’s developers are working on watermarking AI-generated output.) How will prevailing standards of academic integrity and honesty change, and how will they be enforced? After all, what is the value of assigning work that can be done with AI?

It’s an uncomfortable, but not unprecedented, question. Google and Wikipedia once raised the same concern and challenged us to design more meaningful assignments and assessments. Calculators did not extinguish the value of teaching computation and foundational mathematical reasoning.

Ben Thompson of Stratechery (himself a parent of a school-age child) proposed the idea of “Zero Trust Homework,” which is less about having students find the right answer and more about evaluating and explaining wrong or partially wrong answers deliberately pushed to them. “The real skill in the homework assignment will be in verifying the answers the system churns out — learning how to be a verifier and an editor, instead of a regurgitator,” he writes.

AI also has equally serious implications for the future of writing and, for that matter, thinking. After all, writing is the most brutal test of our thoughts, since the process often reveals inconsistencies and fallacies in our reasoning. Jane Rosenzweig, director of the Harvard College Writing Center, asked: “If AI is doing the writing, then who is doing the thinking?”

Others have framed the debate as an existential battle between two AIs: artificial intelligence versus academic integrity. But it doesn’t need to be so combative and oppositional. More likely, they’ll evolve together as kids, teachers and technologists uncover new ways to teach, learn, think and build with AI.

This rapidly developing new generation of AI will beget many more questions and attract many more builders with ideas to tackle them. We welcome everyone to contribute to this ongoing dialogue. And of course, if you are building in this space, reach out to tony [at] reachcapital [dot] com.

Special thanks to James Kim and Frank Catalano for reading earlier drafts.